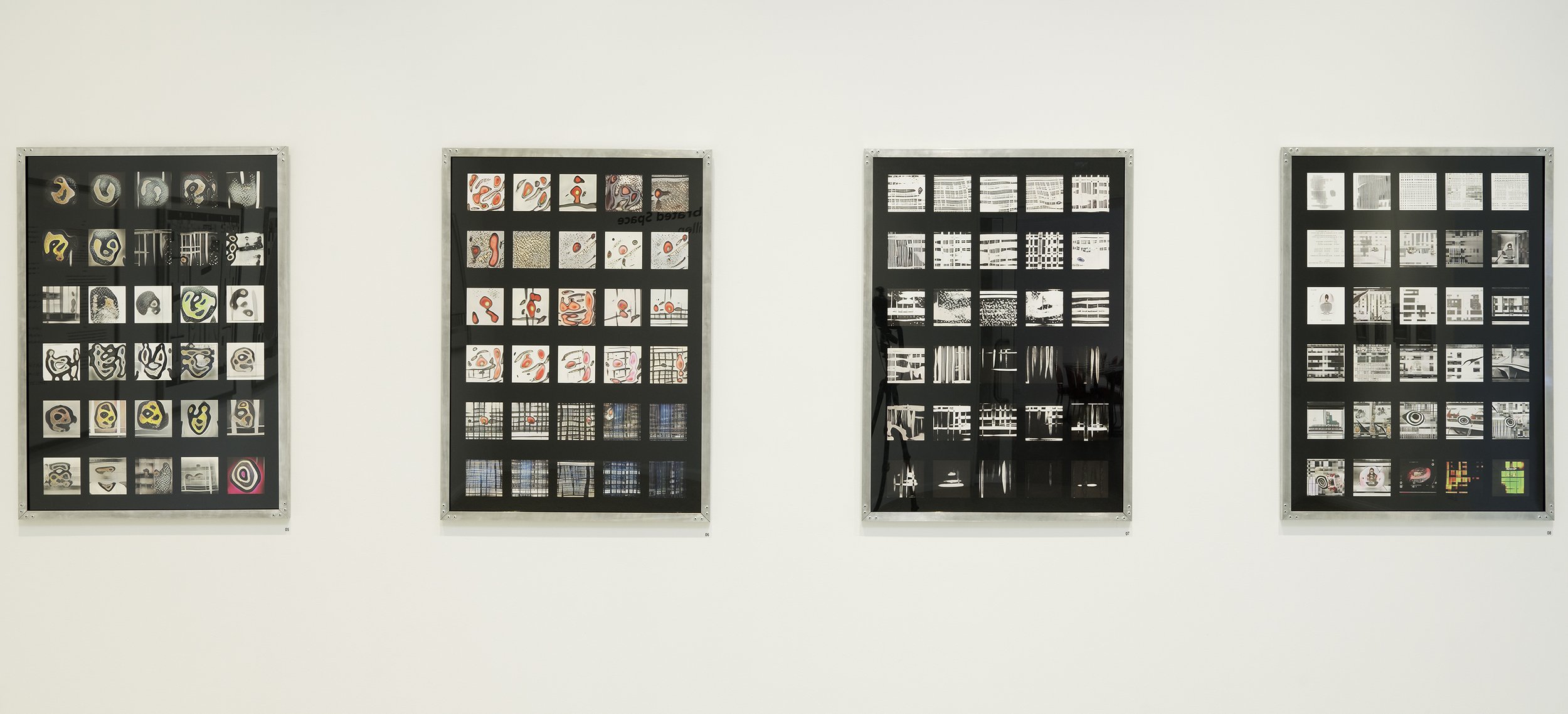

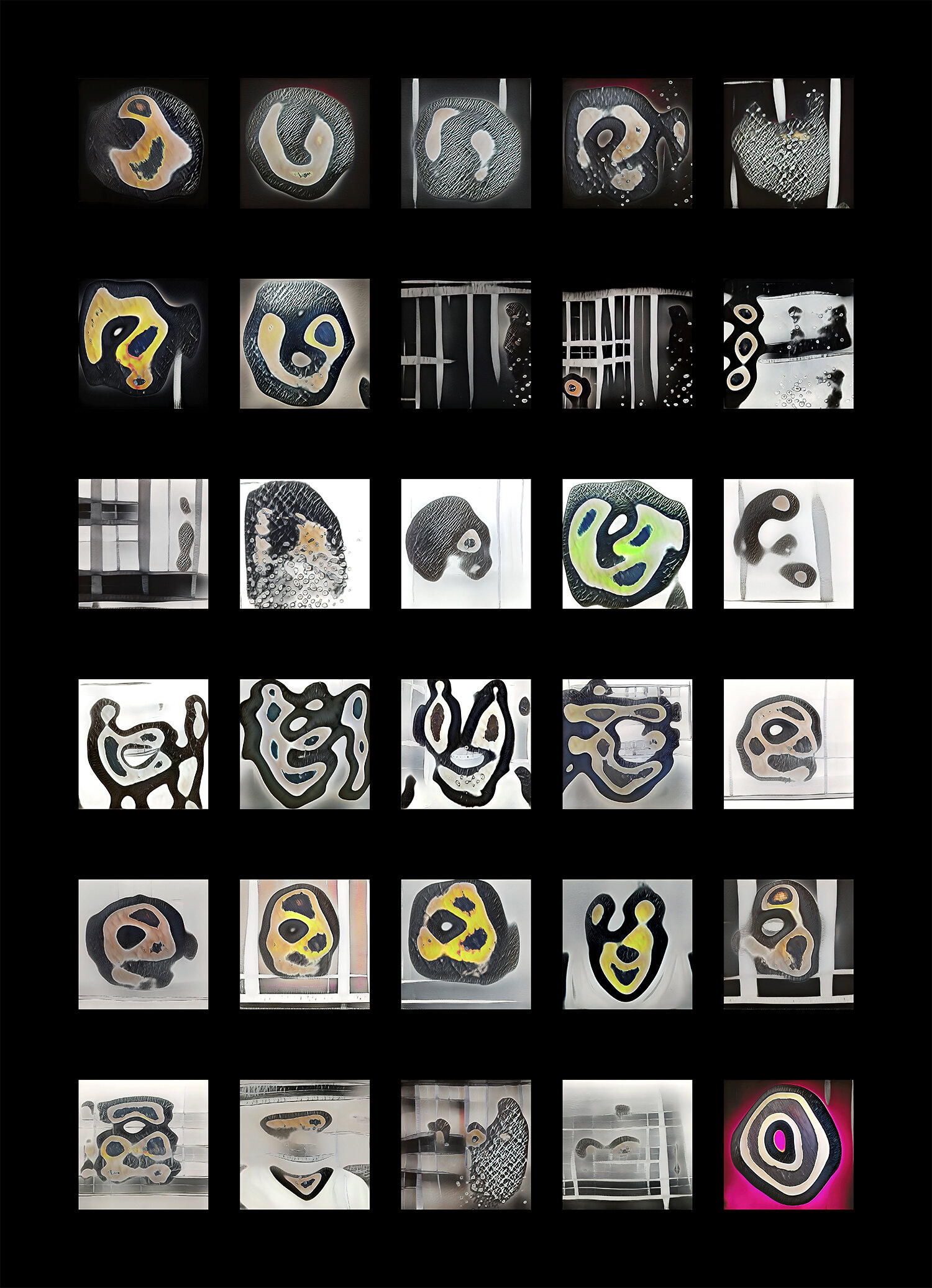

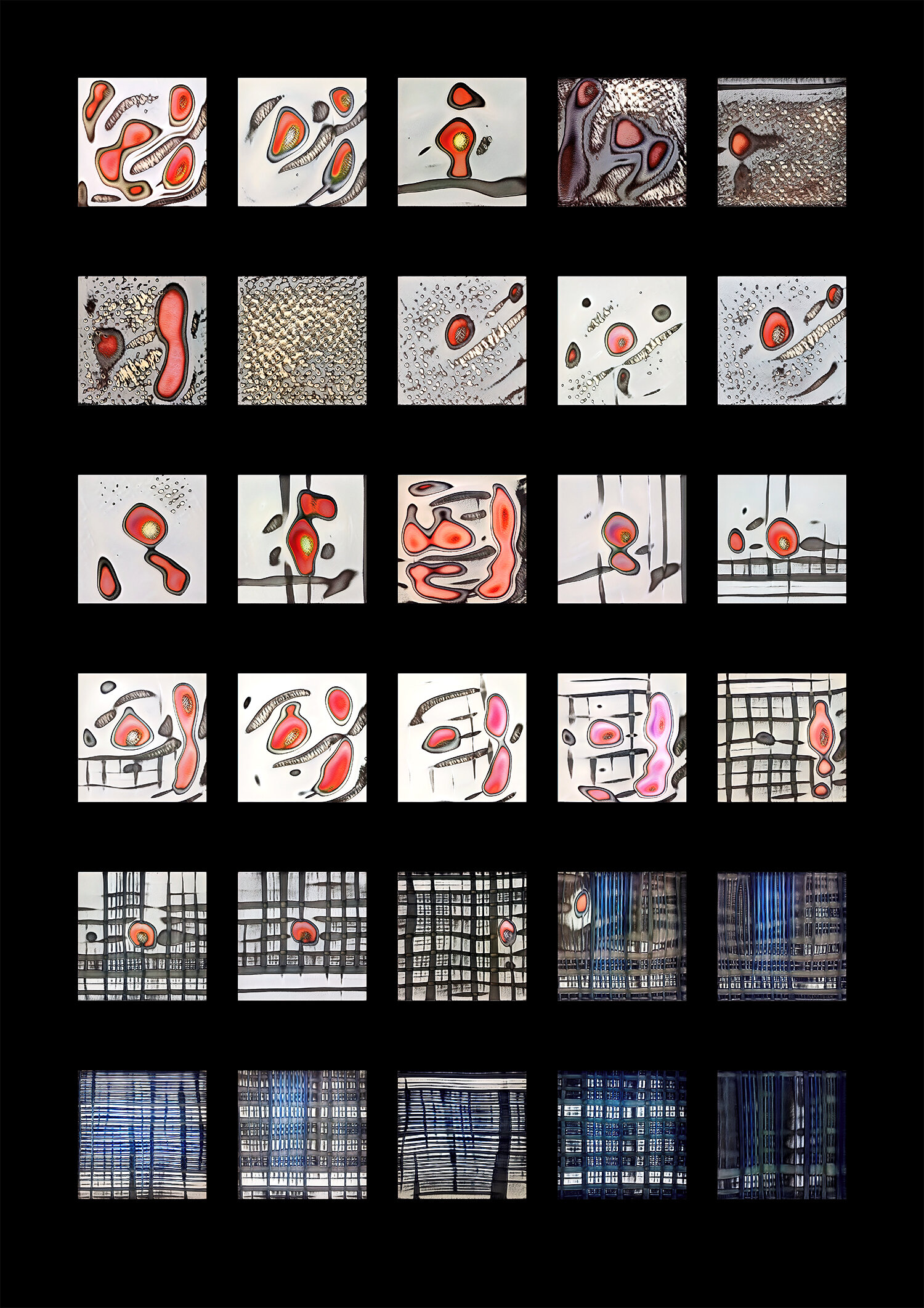

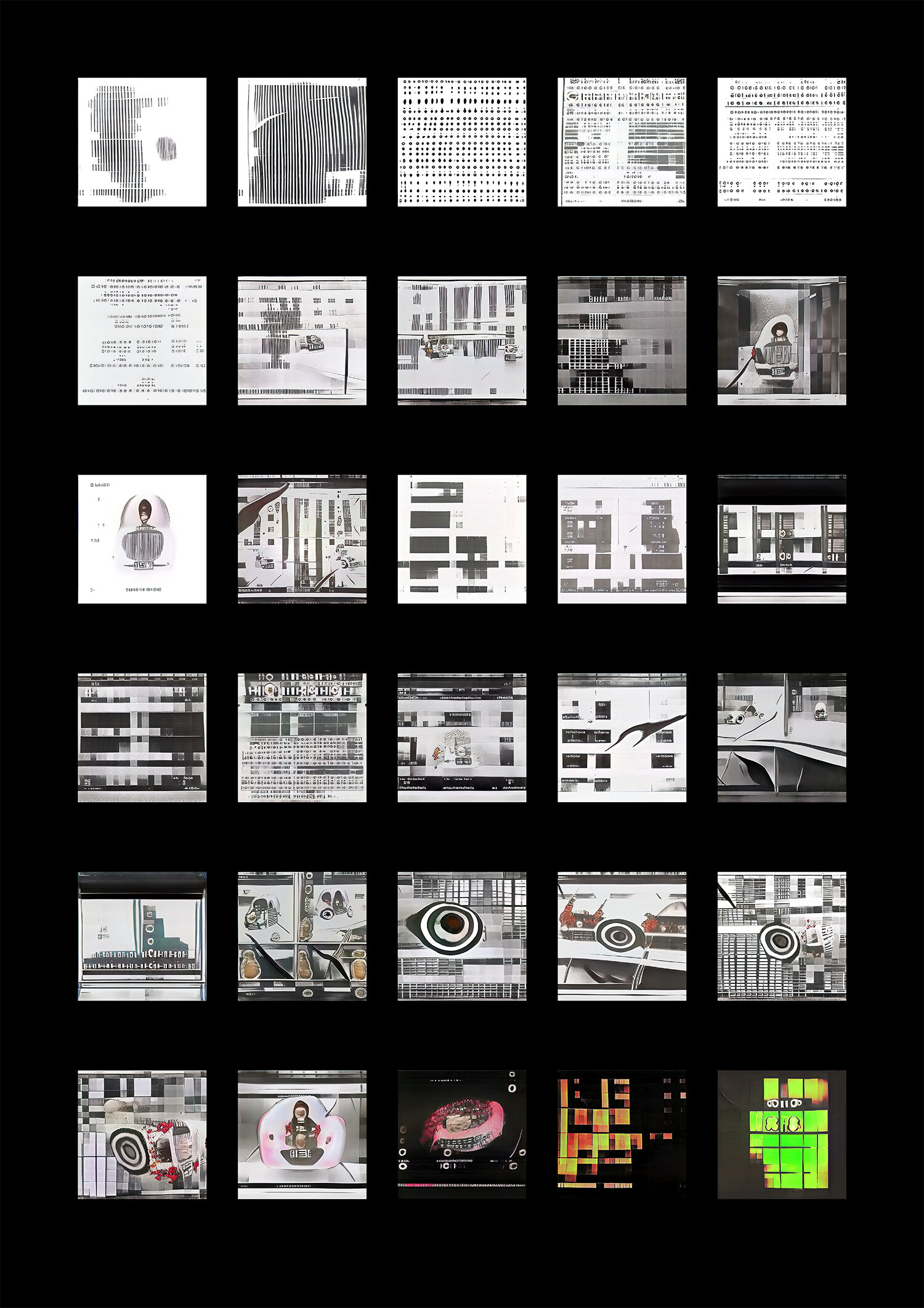

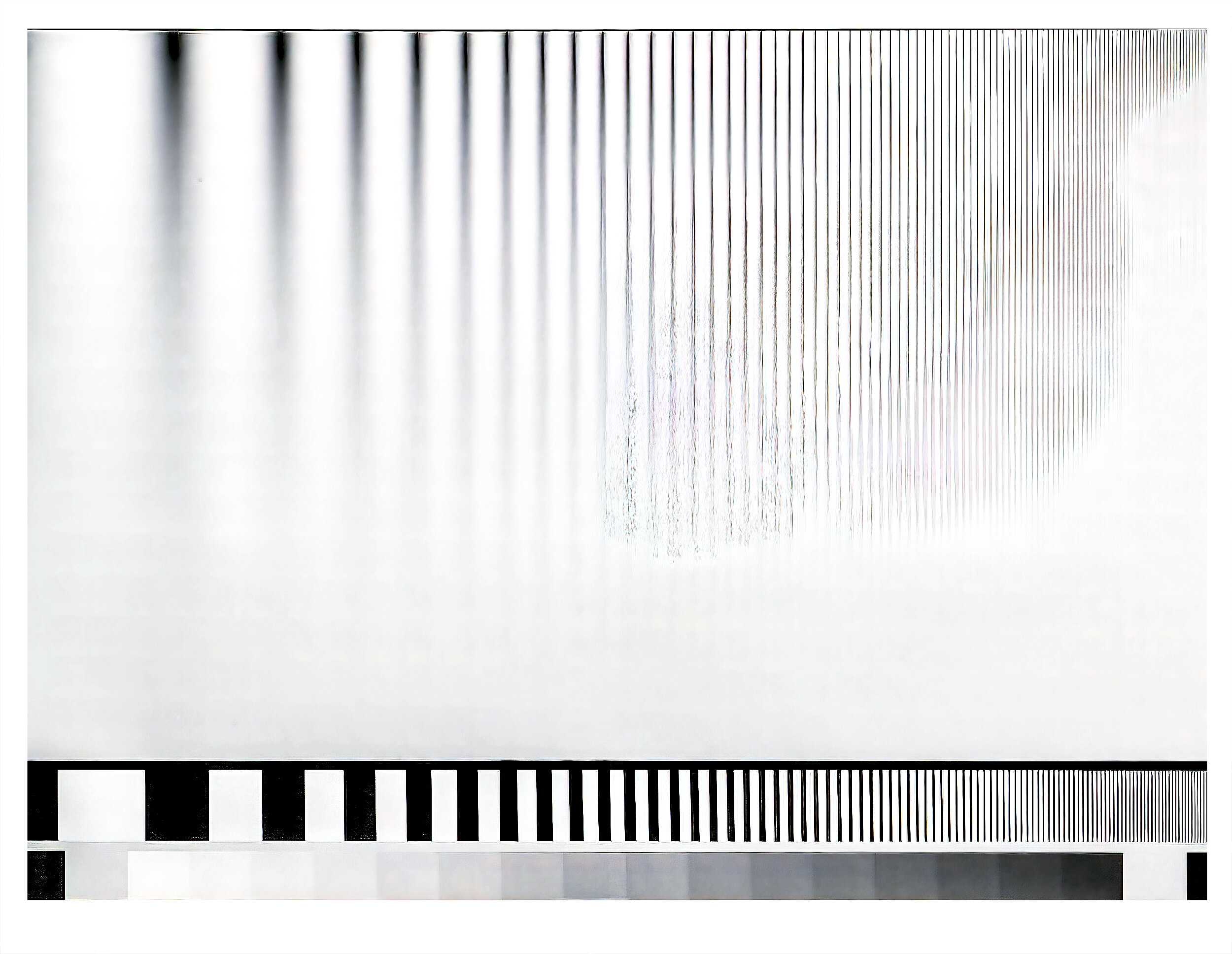

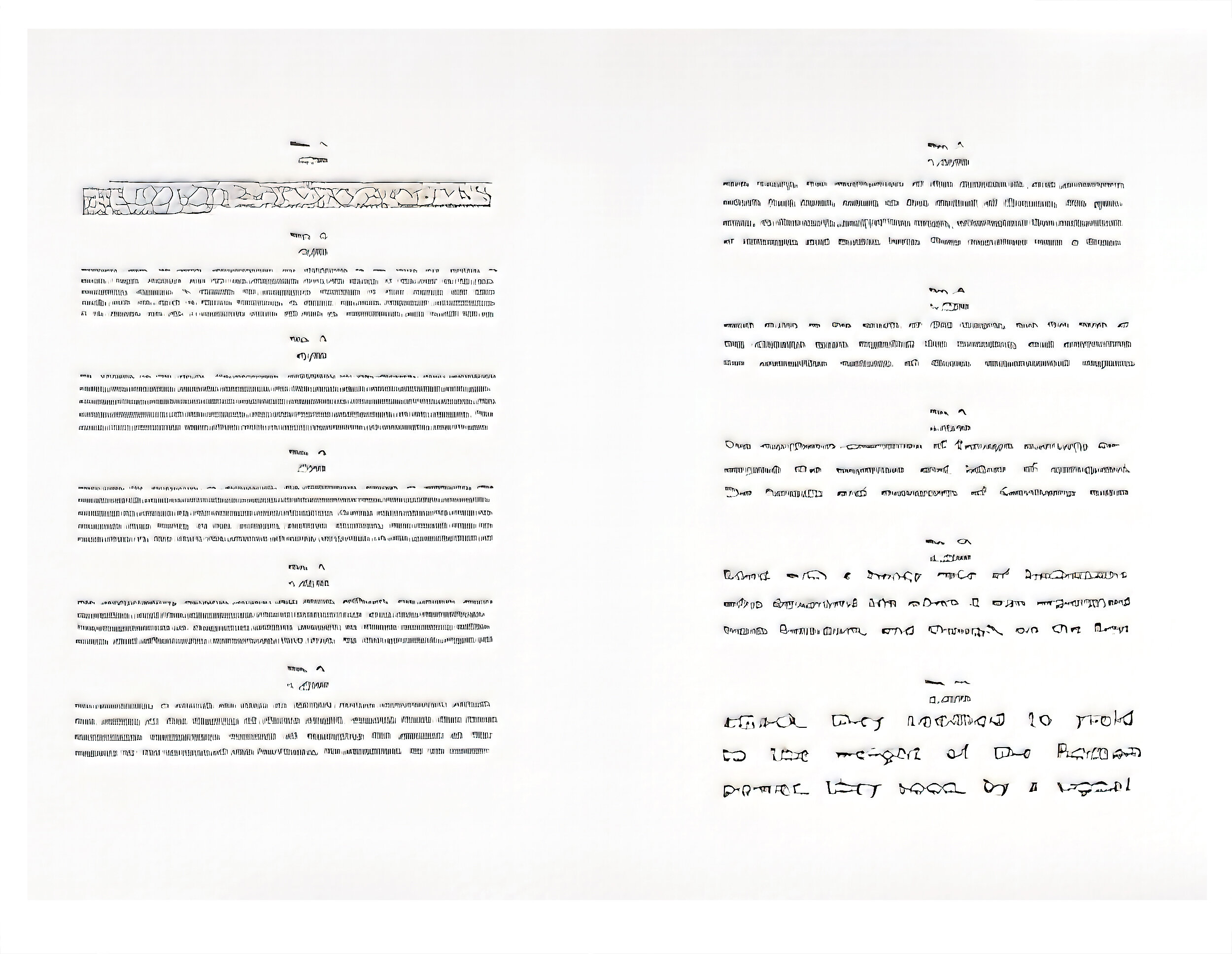

Created in the final months before widespread consumer-facing generative AI models began to swarm the internet, Uncalibrated Space uses the calibration target, a common ‘objective’ truth in photographic practice, to lay bare the distortions that AI image upscaling algorithms carve into images, and to investigate the underlying machinations of generative machine learning systems themselves.

The work is a series of experiments that use early AI upscaling software and train nascent image generation models on a dataset of calibration targets. Applied to excess, the upscaling software morphs and distorts the calibration target, an objective tool, into something wholly alien yet still recognisable. The output of video generation models trained on these targets mimics this act: from noise emerge gestures of the dataset. Where does the calibration target end and the imagination of a calibration target begin?